For ML engineers & data scientists

Agility and openness

Develop Once, Deploy Anywhere

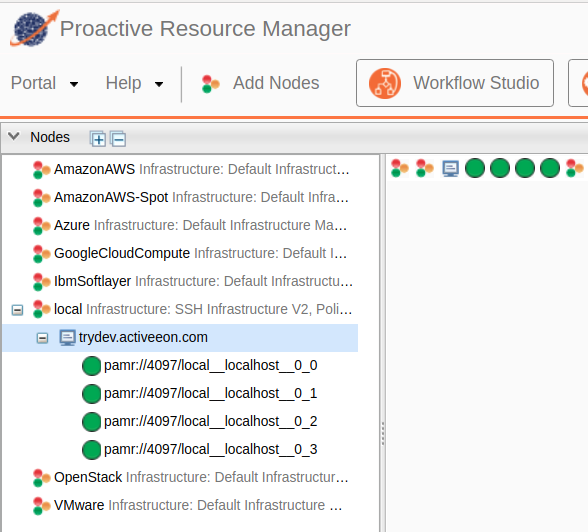

ProActive AI Orchestration is resource-agnostic from development to production, so you can run it on any infrastructure:

- Benefit from a resource abstraction layer provided by the Resource Manager and its ProActive Nodes

- Run workloads locally, on-premises, in the cloud (Azure, AWS, Google Cloud, OpenStack, VMware, etc.) or in hybrid configurations

- Move to production in minutes

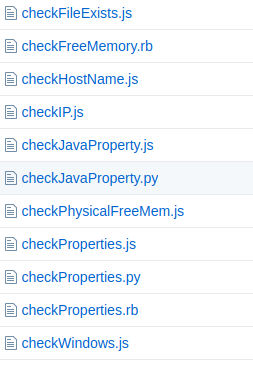

Scripted resource selection

Dynamically select the resources you need: GPU, RAM, OS, libraries, etc.

Select the most relevant resource:

- based on hardware requirements (GPU, RAM, etc.)

- based on location (Azure, AWS, OpenStack, VMware, on-premises, in France, in the US, etc.)

- based on changing conditions (latency, bandwidth, etc.)

- based on OS configuration (Docker enabled, Python3 enabled, etc.)
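In ProActive, this choice is made by selection scripts that are evaluated on candidate nodes to decide whether each one qualifies for a task. The sketch below only mimics that kind of decision logic in plain Python, combining the four criteria listed above. The `NodeInfo` fields, the thresholds, the location labels and `is_selected` are all hypothetical illustrations, not the ProActive API.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Optional, Set


@dataclass
class NodeInfo:
    """Hypothetical description of a candidate node (not a ProActive type)."""
    name: str
    gpus: int
    ram_gb: float
    os: str
    location: str
    latency_ms: float
    capabilities: Set[str] = field(default_factory=set)


def is_selected(node: NodeInfo,
                min_gpus: int = 1,
                min_ram_gb: float = 16.0,
                allowed_locations: Optional[Set[str]] = None,
                max_latency_ms: float = 50.0,
                os_name: str = "linux",
                required: FrozenSet[str] = frozenset({"docker", "python3"})) -> bool:
    """Return True if the node meets every criterion (hypothetical thresholds)."""
    # Hardware requirements (GPU, RAM)
    hardware_ok = node.gpus >= min_gpus and node.ram_gb >= min_ram_gb
    # Location (cloud provider, on-premises, country); None means "anywhere"
    location_ok = allowed_locations is None or node.location in allowed_locations
    # Changing conditions such as network latency
    network_ok = node.latency_ms <= max_latency_ms
    # OS configuration: expected OS plus required capabilities (Docker, Python 3)
    os_ok = node.os == os_name and required <= node.capabilities
    return hardware_ok and location_ok and network_ok and os_ok


nodes = [
    NodeInfo("gpu-paris", 2, 64, "linux", "azure-france", 12.0, {"docker", "python3"}),
    NodeInfo("cpu-onprem", 0, 128, "linux", "on-prem", 2.0, {"docker", "python3"}),
    NodeInfo("gpu-us", 4, 256, "linux", "aws-us", 95.0, {"docker", "python3"}),
]

# Keep only GPU nodes located in France or on-premises
selected = [n.name for n in nodes
            if is_selected(n, allowed_locations={"azure-france", "on-prem"})]
print(selected)  # ['gpu-paris']
```

Here `cpu-onprem` is rejected for lacking a GPU and `gpu-us` for its location, so only `gpu-paris` is kept. Expressing each criterion as a separate boolean keeps the rule easy to extend with new constraints.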

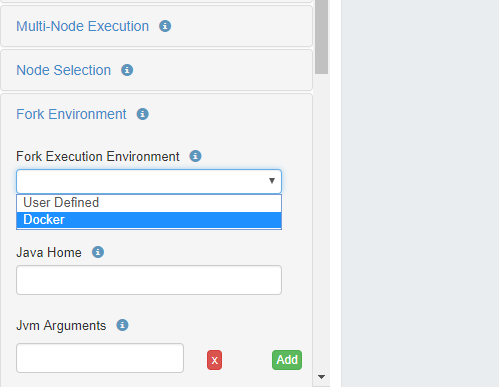

Simplified Docker Integration

Share files and variables across containers

- Variable propagation across containers

- File sharing across containers via the Dataspace

- All libraries available in any environment
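To illustrate what variable propagation and file sharing between containerized steps mean in practice, here is a minimal in-process sketch, assuming a simple model where each task receives a shared variable map and a shared directory standing in for the Dataspace. `run_workflow`, `preprocess`, `train` and the variable names are hypothetical and do not reflect ProActive's actual task API; in a real workflow each task could run in its own container.

```python
import tempfile
from pathlib import Path
from typing import Callable, Dict, List

# Hypothetical task signature: each task gets the shared variables and a
# directory standing in for the Dataspace, and may update both.
Task = Callable[[Dict[str, str], Path], None]


def run_workflow(tasks: List[Task]) -> Dict[str, str]:
    """Run tasks in order, propagating variables and files between them."""
    variables: Dict[str, str] = {}
    with tempfile.TemporaryDirectory() as tmp:
        dataspace = Path(tmp)  # stand-in for a shared Dataspace
        for task in tasks:
            task(variables, dataspace)
    return variables


def preprocess(variables: Dict[str, str], dataspace: Path) -> None:
    # Write a file to the shared space and record its name in a variable.
    (dataspace / "clean.csv").write_text("x,y\n1,2\n3,4\n")
    variables["DATASET"] = "clean.csv"


def train(variables: Dict[str, str], dataspace: Path) -> None:
    # Locate the previous task's output through the propagated variable.
    rows = (dataspace / variables["DATASET"]).read_text().splitlines()
    variables["N_SAMPLES"] = str(len(rows) - 1)  # exclude the header row


result = run_workflow([preprocess, train])
print(result)  # {'DATASET': 'clean.csv', 'N_SAMPLES': '2'}
```

The second task never hard-codes the file name: it reads it from the variable set by the first task, which is what lets steps running in separate containers stay decoupled.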

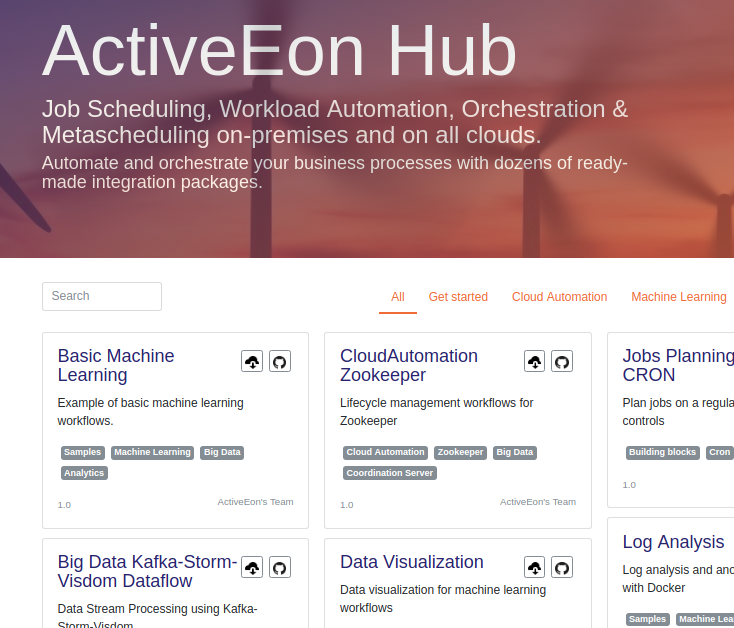

Benefit from a fully open system and leverage the best libraries. Set up a complete machine learning orchestration system with ProActive AI Orchestration.

- Integrate with any machine learning and deep learning libraries

- Extend Studio by importing custom packages

- Or extend Studio with our community packages available on the Hub